Remotely Installing OpenBSD on a Headless Linux Server

I recently activated a new dedicated server that came preinstalled with Linux, as the hosting provider didn't support OpenBSD. Since they also didn't provide an IP-based KVM without purchasing a dedicated hardware module (though most of the IP-KVMs I've used recently require interfacing with some terrible Java-based monstrosity anyway), I needed a way to remotely install OpenBSD over the running Linux server.

YAIFO

I've previously used YAIFO for remote OpenBSD installations. It adds an SSH daemon to the OpenBSD installer image and brings up a network interface that is manually configured before compiling the image. The image is then dd'd directly to the hard drive while running whatever OS is on the system, the system is rebooted, and if all goes according to plan, the machine boots into OpenBSD and presents you with SSH access so you can run the installer.

Unfortunately, YAIFO hasn't been updated in a few years and wouldn't compile on OpenBSD 5.5. I forked it and spent some time updating it to compile on 5.5, but for some reason the final image wouldn't boot properly on the new server or inside a local VMware virtual machine. Since YAIFO is a "spray-and-pray" option where one has to overwrite the beginning of the hard drive, reboot, and hope the machine starts responding to pings again, I was out of luck when it wouldn't boot properly.

This hosting provider has a recovery option in their web control panel where it will reboot the server and load a small Linux distribution over PXE, bring up your server's IP, and then start an SSH daemon with a randomly assigned root password. I activated this recovery mode and was able to SSH into the server, which now appeared to be running GRML, a small distribution based on Debian.

QEMU

Since the recovery image does everything from a big ramdisk to avoid touching the server's hard drives, it had plenty of space (in RAM) to install other packages, which gave me the idea of installing QEMU on it.

    root@grml:~# df -h
    Filesystem  Size  Used Avail Use% Mounted on
    rootfs      4.0G  564M  3.4G  15% /
    udev         10M     0   10M   0% /dev
    tmpfs       1.6G  260K  1.6G   1% /run
    /dev/loop0  210M  210M     0 100% /lib/live/mount/rootfs/grml.squashfs
    tmpfs       4.0G     0  4.0G   0% /lib/live/mount/overlay
    tmpfs       4.0G     0  4.0G   0% /lib/live/mount/overlay
    aufs        4.0G  564M  3.4G  15% /
    tmpfs       5.0M     0  5.0M   0% /run/lock
    tmpfs       1.6G     0  1.6G   0% /run/shm
    root@grml:~# apt-get update
    [...]
    root@grml:~# apt-get install qemu
    [...]
    Need to get 52.5 MB of archives.
    After this operation, 232 MB of additional disk space will be used.
    Do you want to continue? [Y/n] y
    [...]

Now that QEMU was installed, I fetched and manually verified the checksum of the OpenBSD 5.5 miniroot55.fs image (though one of the downloadable OpenBSD CD images could also be used):

    root@grml:~# wget http://mirror.planetunix.net/pub/OpenBSD/5.5/amd64/miniroot55.fs
    [...]
    root@grml:~# sha256sum miniroot55.fs
    27b4bac27d0171beae743aa5e6e361663ffadf6e4abe82f45693602dd0eb2c9f miniroot55.fs
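The manual comparison above can also be scripted so that a mismatch is hard to overlook. This is only a minimal sketch: verify_sha256 is a hypothetical helper name, and the expected hash would normally be copied from the SHA256 file published next to the image on the mirror.

```shell
#!/bin/sh
# verify_sha256 FILE EXPECTED_HASH
# Succeeds (exit status 0) only when FILE's SHA-256 digest equals
# EXPECTED_HASH. verify_sha256 is a hypothetical helper, not a real tool.
verify_sha256() {
    file=$1
    expected=$2
    # sha256sum prints "<hash>  <filename>"; keep only the hash field.
    # If the file is missing, actual ends up empty and the test fails.
    actual=$(sha256sum "$file" | awk '{print $1}')
    [ -n "$actual" ] && [ "$actual" = "$expected" ]
}

# Example, using the hash printed above:
#   verify_sha256 miniroot55.fs \
#       27b4bac27d0171beae743aa5e6e361663ffadf6e4abe82f45693602dd0eb2c9f \
#       && echo "checksum OK" || echo "checksum MISMATCH"
```

Comparing against a hash obtained separately from the download is the point of the exercise; a hash computed from the downloaded file itself proves nothing.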

Now I could boot QEMU, passing miniroot55.fs as the first hard drive, then /dev/sda as the second and /dev/sdb as the third. I used user-space networking to give the QEMU guest a virtual NIC, since it would need to do NAT through the Linux host, which was using the one IP assigned to my server.

root@grml:~# qemu-system-x86_64 -curses -drive file=miniroot55.fs -drive file=/dev/sda -drive file=/dev/sdb -net nic -net user
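To make the role of each option easier to see, here is the same command assembled piece by piece. It is a sketch that only prints the command line rather than running it, and qemu_install_cmd is a hypothetical helper name. The options themselves are exactly the ones used above.

```shell
#!/bin/sh
# qemu_install_cmd IMAGE DISK...
# Prints (does not run) a QEMU command line like the one above.
# qemu_install_cmd is a hypothetical helper used for illustration only.
qemu_install_cmd() {
    image=$1
    shift
    # -curses draws the guest's text console in the SSH terminal.
    cmd="qemu-system-x86_64 -curses"
    # The first -drive is the installer image, so the guest boots from it.
    cmd="$cmd -drive file=$image"
    # Each following -drive hands a raw host disk to the guest, in order.
    for disk in "$@"; do
        cmd="$cmd -drive file=$disk"
    done
    # One emulated NIC, attached to QEMU's user-mode (NAT) network stack,
    # so the guest never needs the host's single public IP.
    cmd="$cmd -net nic -net user"
    printf '%s\n' "$cmd"
}

qemu_install_cmd miniroot55.fs /dev/sda /dev/sdb
```

The order of the -drive options matters: the installer image comes first so that it is the boot disk, and the physical disks follow as the second and third drives.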

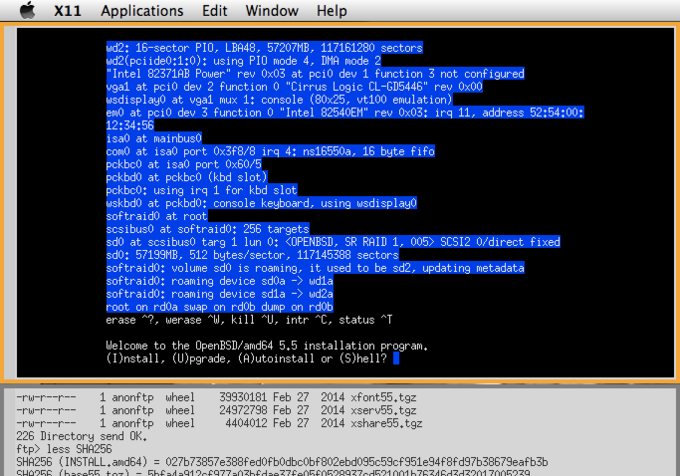

This gave me a wonderfully useful curses interface to OpenBSD running inside QEMU while still having direct access to the hard drives on the physical machine. I did the OpenBSD installation as normal, after manually assembling a softraid mirrored array of the two hard drives (which showed up as wd1 and wd2 in the VM).

After completing installation but before rebooting, I changed the hostname.em0 and mygate files on the installed system to use the server's assigned IP and gateway, since they were set to DHCP (through QEMU) during installation because the live IP was already being used by the Linux host.
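As a rough illustration of that edit, the two files could be rewritten from the installer's shell along these lines. This is a sketch under assumptions: write_netconf is a hypothetical helper, the addresses are placeholders from the documentation range rather than real values, and the installed system is assumed to still be mounted under /mnt.

```shell
#!/bin/sh
# write_netconf ROOT IFNAME ADDR NETMASK GATEWAY
# Writes a static network configuration under ROOT/etc.
# write_netconf is a hypothetical helper; ROOT is assumed to be /mnt
# when run from the installer before rebooting.
write_netconf() {
    root=$1
    ifname=$2
    addr=$3
    mask=$4
    gw=$5
    # hostname.if(5): an "inet <address> <netmask>" line replaces "dhcp".
    printf 'inet %s %s\n' "$addr" "$mask" > "$root/etc/hostname.$ifname"
    # Keep the file unreadable to ordinary users.
    chmod 640 "$root/etc/hostname.$ifname"
    # mygate holds the default gateway address on a single line.
    printf '%s\n' "$gw" > "$root/etc/mygate"
}

# Example with placeholder addresses (substitute the server's real ones):
#   write_netconf /mnt em0 192.0.2.10 255.255.255.0 192.0.2.1
```

The em0 name is only what the emulated NIC was called inside QEMU; the file has to be named after whatever driver attaches to the physical NIC.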

After halting the VM, I exited QEMU (ESC+2, then "quit") and rebooted the physical server. The reboot returned it to booting from its primary hard drive, which brought up my new OpenBSD installation.

Caveats

This type of installation requires knowing exactly what kind of network cards are in the server, since you will have to manually rename the hostname.* files before rebooting. By checking the dmesg of the Linux recovery image, you can see the PCI ID of the NIC and verify that it matches a particular driver in OpenBSD.
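Besides reading dmesg, the numeric PCI IDs can be pulled out with lspci, assuming the pciutils package is available on the recovery image (I did not rely on it above, so treat this as an alternative). The helper name nic_pci_ids is hypothetical.

```shell
#!/bin/sh
# nic_pci_ids: read `lspci -nn` output on stdin and print the
# vendor:device ID of every Ethernet controller, one per line.
# nic_pci_ids is a hypothetical helper name.
nic_pci_ids() {
    # lspci -nn appends IDs in brackets, e.g. "... [vvvv:dddd] (rev xx)".
    # The class code such as [0200] has no colon, so it is not matched.
    grep -i 'ethernet controller' |
        sed -n 's/.*\[\([0-9a-fA-F]\{4\}:[0-9a-fA-F]\{4\}\)\].*/\1/p'
}

# On the recovery system:
#   lspci -nn | nic_pci_ids
```

The resulting vendor:device pairs can then be looked up in OpenBSD's sys/dev/pci/pcidevs list and the driver manual pages to work out which hostname.* name the interface will need.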

If OpenBSD does not boot properly (or can't bring up networking) after installation, it should be fairly easy to again use the Linux recovery option, install QEMU, and boot to the first raw hard drive to make sure it comes up. If so, check the previous dmesg logged to /var/log/messages inside the VM to verify that the NIC was configured properly.