Live Streaming a Macintosh Plus (or Any Compact Mac)

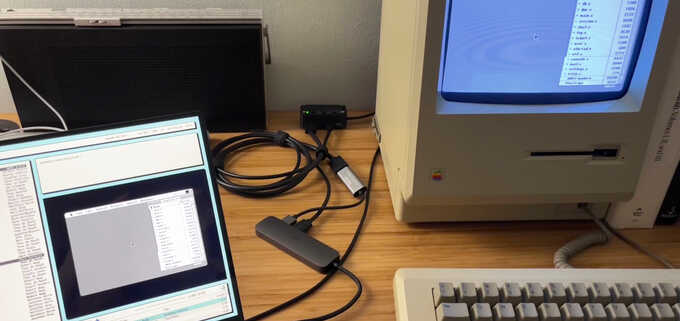

Since recording a handful of C Programming on System 6 videos, I've occasionally wanted to live-stream the more casual daily programming being done on my Macintosh Plus. After getting all of the pieces together, I now have a working self-hosted broadcasting setup.

If I happen to be programming on my Mac right now, you can watch here at my website.

Getting Video From a Macintosh Plus

Back in 2020, I experimented with writing a pseudo-driver for my Macintosh Plus that would send its screen data over the SCSI port to a Raspberry Pi with a RaSCSI hat, which would then display the data on the Pi's framebuffer, making it possible to capture the Mac's screen through the Pi's HDMI output. This proved to be too slow to work and ate a lot of processing time on the Mac (though it was admittedly not well optimized), so I went back to recording videos with an actual camera (an iPad).

After watching Adrian Black's video in 2021 about using an RGBtoHDMI device to get video from a Macintosh Classic, I tried to get one but they were not being sold anywhere. The RGBtoHDMI is a board that attaches to a Raspberry Pi Zero's GPIO header and uses a CPLD to process analog or TTL digital video from a variety of sources and then feeds it to some bare-metal software running on the Pi to display on the Pi's HDMI output.

Building my own RGBtoHDMI ended up taking me quite a while. I first had to send the open source PCB designs to PCBWay for printing (and I now have 9 extra PCBs) and then source all of the components. The most important component, the Xilinx XC9572XL CPLD, was also the hardest to acquire due to the worldwide component shortage. Finally, assembly required soldering a bunch of tiny capacitors and the CPLD's 44 pins, and since I had never done surface-mount soldering before, I was a bit nervous.

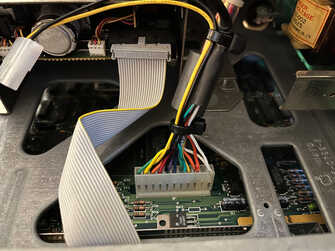

With the RGBtoHDMI working, I needed to connect it to my Mac Plus. I soldered in four wires on the wiring harness that connects the Mac Plus's analog and logic boards, tapping into the monochrome video signal, horizontal sync, vertical sync, and ground. (These wires carry only low-voltage TTL signals so it should be pretty safe, but don't take my word for it.)

| Macintosh Plus | Wire | RGBtoHDMI |
|---|---|---|
| Video - White (1) | White | GRN2 (Video) |
| Horizontal Sync - Orange (3) | Red | HS (Horizontal Sync) |
| Vertical Sync - Green (5) | Green | VS (Vertical Sync) |
| Ground - Dark Purple (7) | Black | 0V (Ground) |

I added heat-shrink tubing around the wires and ran them through the locking desk connector on the rear of the Mac. I also 3D-printed a case for the Raspberry Pi Zero and RGBtoHDMI.

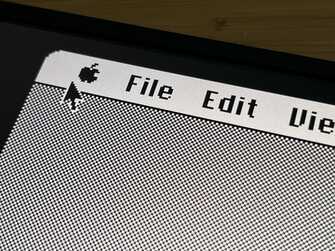

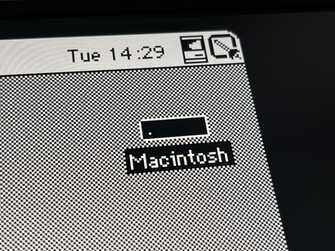

Once the wires were correctly hooked up and the RGBtoHDMI was tuned with the clock settings from Adrian's video, I had a pixel-perfect copy of my Mac Plus's 512x342 screen appearing in real-time on an HDMI screen (with padding to fill its 640x480 framebuffer).

HDMI Capture

I purchased a cheap $20 HDMI capture device (with the most unfortunate Markov Chain brand name) which presents an HDMI source as a standard USB webcam. On my OpenBSD laptop, it appears as a uvideo USB device with accompanying uaudio:

```
uvideo1 at uhub5 port 4 configuration 1 interface 0 "MACROSILICON USB3. 0 capture" rev 2.00/21.00 addr 14
video1 at uvideo1
uaudio1 at uhub5 port 4 configuration 1 interface 3 "MACROSILICON USB3. 0 capture" rev 2.00/21.00 addr 14
uaudio1: class v1, high-speed, sync, channels: 0 play, 1 rec, 1 ctls
audio2 at uaudio1
uhidev4 at uhub5 port 4 configuration 1 interface 4 "MACROSILICON USB3. 0 capture" rev 2.00/21.00 addr 14
uhidev4: iclass 3/0
uhid7 at uhidev4: input=0, output=0, feature=8
```

I was able to use the video(1) utility to see a pixel-perfect (when using YUY2 encoding) copy of the Mac's screen. In my testing, this cheap HDMI capture device was fully capable of displaying the Mac's screen at 30fps, though it is of course only a 640x480 1-bit video stream. I don't know whether it (or OpenBSD) is capable of keeping up with a much higher resolution, full-color source like capturing a game.
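As a rough back-of-the-envelope check on that question, the sketch below estimates the raw data rate of uncompressed YUY2 video, which averages 2 bytes per pixel. The 480 Mbit/s figure is the theoretical USB 2.0 high-speed signaling rate (the dmesg output above reports the device as high-speed); real-world throughput is lower, and the function name is only for illustration.

```python
# YUY2 (packed YUV 4:2:2) stores 2 bytes per pixel on average.
YUY2_BYTES_PER_PIXEL = 2

# Theoretical USB 2.0 high-speed signaling rate, in bytes per second.
USB2_HIGH_SPEED_BYTES_PER_SEC = 480_000_000 // 8


def yuy2_bytes_per_second(width: int, height: int, fps: int) -> int:
    """Raw data rate of an uncompressed YUY2 stream."""
    return width * height * YUY2_BYTES_PER_PIXEL * fps


mac_stream = yuy2_bytes_per_second(640, 480, 30)
hd_stream = yuy2_bytes_per_second(1920, 1080, 30)

print(f"640x480 @ 30 fps:   {mac_stream / 1e6:.1f} MB/s")
print(f"1920x1080 @ 30 fps: {hd_stream / 1e6:.1f} MB/s")
print(f"USB 2.0 ceiling:    {USB2_HIGH_SPEED_BYTES_PER_SEC / 1e6:.1f} MB/s")
```

By this estimate the Mac's 640x480 stream needs about 18.4 MB/s, comfortably under the 60 MB/s ceiling, while uncompressed 1080p at 30 fps would need about 124.4 MB/s. A larger source would presumably have to be sent in a compressed format such as MJPEG, or at a lower frame rate.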

On modern macOS, the HDMI source appears as a normal USB webcam available through any program that can access a webcam such as QuickTime or VLC.

Streaming

In my usual fashion of wanting to retain control over my content, I didn't want to outsource my streaming to YouTube or Twitch.

Since the black and white screen data is so compact (a full screen as a 1-bit PNG is between 5 KB and 9 KB) and I wanted to avoid blurring it by compressing it as a video stream, I started writing a program to read the YUY2 data directly from the device, discard unnecessary pixel data, and then send it to a program running on my server that would serve it through WebSockets. The idea was that some JavaScript could read the WebSocket data as a stream and directly draw each frame on a <canvas> element.
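As a rough illustration of the "discard unnecessary pixel data" step (a minimal sketch, not the actual program), YUY2 stores pixels in Y0 U Y1 V byte groups, so every even-indexed byte is a luma sample. A monochrome screen only needs one thresholded bit of each. The function name, the threshold of 128, and the choice of 1 meaning white are all assumptions made for this example.

```python
def yuy2_to_1bit(frame: bytes, width: int, height: int,
                 crop_x: int, crop_y: int, crop_w: int, crop_h: int,
                 threshold: int = 128) -> bytes:
    """Crop a YUY2 frame and pack it to 1 bit per pixel (1 = white)."""
    if len(frame) != width * height * 2:
        raise ValueError("frame size does not match a YUY2 frame")
    if crop_x + crop_w > width or crop_y + crop_h > height:
        raise ValueError("crop rectangle falls outside the frame")
    if crop_w % 8:
        raise ValueError("crop width must be a multiple of 8")

    out = bytearray(crop_w * crop_h // 8)
    stride = width * 2  # bytes per source row
    i = 0
    for y in range(crop_y, crop_y + crop_h):
        row = y * stride
        for x in range(crop_x, crop_x + crop_w, 8):
            byte = 0
            for bit in range(8):
                # Luma (Y) samples sit at every even byte offset; the
                # interleaved U and V chroma bytes are never read.
                luma = frame[row + (x + bit) * 2]
                byte = (byte << 1) | (luma >= threshold)
            out[i] = byte
            i += 1
    return bytes(out)


# Hypothetical all-white 640x480 frame (Y=235, chroma=128).
frame = bytes([235, 128]) * (640 * 480)
packed = yuy2_to_1bit(frame, 640, 480, 64, 69, 512, 342)
print(len(packed))  # 21888 bytes, i.e. 512 * 342 / 8
```

Each 614,400-byte YUY2 frame shrinks to 21,888 bytes before any further compression, which is why sending raw 1-bit frames looked plausible.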

I eventually scrapped that idea and turned to a more traditional streaming setup with FFmpeg and the wonderful rtsp-simple-server. After much reading of documentation, testing, and guessing at why Firefox wouldn't play any video that I was sending (it is unable to play avc1.f4001e video, which identifies H.264's High 4:4:4 Predictive profile), I reached a solution that works:

```
ffmpeg -f v4l2 -i /dev/video1 \
    -c:v libx264 -profile:v main -pix_fmt yuv420p -preset ultrafast \
    -filter:v "crop=512:344:64:69" \
    -max_muxing_queue_size 1024 -g 30 \
    -rtsp_transport tcp \
    -f rtsp rtsp://user:pass@jcs.org:8554/live
```

This instructs FFmpeg to read from the HDMI capture V4L2 device at /dev/video1, crop it down from 640x480 to 512x344, and encode it as H.264 video (using libx264) with the YUV420p pixel format that Firefox needs. It sends the result over RTSP (using TCP) to rtsp-simple-server running on my server, which then serves it as an HLS stream.
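The crop filter's arguments are width:height:x:y. Assuming the RGBtoHDMI centers the Mac's 512x342 image in its 640x480 framebuffer (an assumption, not something confirmed here), the x and y offsets fall out of simple arithmetic. The helper name is hypothetical.

```python
def centered_crop(frame_w, frame_h, image_w, image_h):
    """Return the (x, y) offset of an image centered in a larger frame."""
    return (frame_w - image_w) // 2, (frame_h - image_h) // 2


x, y = centered_crop(640, 480, 512, 342)
crop_filter = f"crop=512:344:{x}:{y}"
print(crop_filter)  # crop=512:344:64:69
```

The computed offsets match the x and y values used in the command above.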

To have FFmpeg broadcast while also saving a copy of the stream to a local file, its tee output can be used instead:

```
[...]
    -rtsp_transport tcp \
    -f tee -map 0:v "stream.mp4|[f=rtsp]rtsp://user:pass@jcs.org:8554/live"
```

The Mac's screen is 512x342, but for whatever reason I can't get it to encode properly at that resolution without adding a 1-pixel black bar at the top, making it 512x343. I made it 512x344 to keep the height even (YUV420p encoding expects even dimensions), and then adjusted the video position on my website to show it as 512x342.

I'm not sending any audio to the stream, but doing so would just be an extra -c:a flag to ffmpeg to also mux in audio from my laptop's microphone. Maybe I'll do this with just ambient audio of my keyboard and mouse clicks when I'm not listening to music.

Since the RGBtoHDMI software runs directly on the Raspberry Pi, it boots and starts displaying HDMI data within a couple seconds of receiving USB power without having to wait for a full Linux kernel to boot.

Once the video is available, I can run the FFmpeg script and start streaming to rtsp-simple-server, which then makes the stream available to anyone with my Live page open. Since I'm not using OBS or another heavy video muxing process, it's all very lightweight, and my fanless OpenBSD laptop handles streaming while playing music and running my web browser without any issues.

On my macOS laptop, FFmpeg can read the HDMI USB device through its AVFoundation plugin:

```
ffmpeg -f avfoundation \
    -framerate 30 -pix_fmt uyvy422 -video_size 640x480 -i 0:none \
    ...
```

Server

On the server side I have nginx serving my usual website, but requests for things in the /live directory are proxied back to rtsp-simple-server, which handles all of the HLS processing, playlist generation, and serving of the individual video chunks.

I am using hls.js to do the actual HLS processing in the browser since only Mobile Safari has native support for a <video> tag with an HLS stream as its source. I would have liked to do everything without JavaScript, but even my non-video solution with WebSockets would have required it.

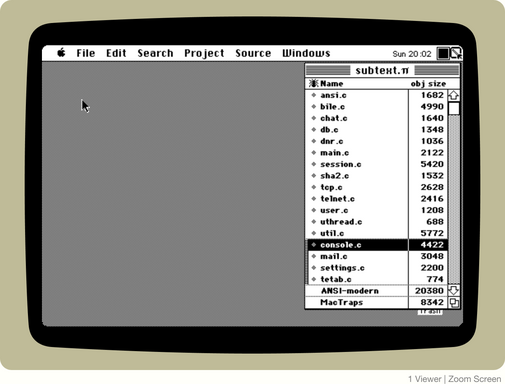

I added some little flourishes on the Live page like drawing a Mac monitor around the video stream, similar to my Amend and Wallops screenshots. I also used a CSS transition to "turn the screen on" when the video starts playing by fading in its opacity.

I'm also displaying a live viewer count, which is done by a script on my server tailing the rtsp-simple-server log file and making a rough count of viewers based on unique IPs that have fetched a video chunk within the past 30 seconds. It writes this count to a text file that some JavaScript fetches every 10 seconds when the video is on and then updates the view counter under the screen.

It would have been nice if rtsp-simple-server could do this rough viewer count calculation on its own and send the count as some kind of metadata in the HLS playlist, so I could avoid the additional server process and each client having to fetch this counter separately.
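A rough sketch of how such a counting step might work. The log line format here (an ISO timestamp, client IP, and requested path separated by spaces) and the `.ts` chunk suffix are invented for illustration and are not rtsp-simple-server's actual log format; a real script would need a parser that matches the real logs.

```python
from datetime import datetime, timedelta

WINDOW = timedelta(seconds=30)


def count_viewers(log_lines, now, window=WINDOW):
    """Count unique IPs that fetched a video chunk within the window."""
    recent_ips = set()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip lines that don't match the assumed format
        stamp, ip, path = parts
        if not path.endswith(".ts"):
            continue  # only count chunk fetches, not playlist requests
        try:
            when = datetime.fromisoformat(stamp)
        except ValueError:
            continue  # skip lines with an unparseable timestamp
        if timedelta(0) <= now - when <= window:
            recent_ips.add(ip)
    return len(recent_ips)


# Hypothetical log excerpt in the assumed format.
sample = [
    "2022-05-01T12:00:05 203.0.113.7 /live/seg1.ts",
    "2022-05-01T12:00:20 203.0.113.7 /live/seg2.ts",
    "2022-05-01T12:00:21 198.51.100.4 /live/seg2.ts",
    "2022-05-01T11:59:00 192.0.2.9 /live/seg0.ts",
    "2022-05-01T12:00:22 192.0.2.50 /live/index.m3u8",
]
now = datetime(2022, 5, 1, 12, 0, 30)
print(count_viewers(sample, now))  # 2
```

The resulting number could then be written to the text file that the page polls. Counting by IP is only approximate, since several viewers behind one address count once.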

Chat

One component of services like YouTube or Twitch that I did want is integrated chat. Users can send comments or questions about what's going on on screen and I can respond either in chat or by showing something on the stream.

Since I already have an IRC channel for a community based around my other videos, I just used Libera Chat's JavaScript chat widget in an <iframe> to allow users to join right from the stream page. Of course, users who don't want to chat in the browser can just join with a normal IRC client and still interact with me and other viewers, something that is not (easily) possible with a YouTube or Twitch stream.

I can also be in the IRC channel from my Mac with the IRC client I just wrote for System 6.

Casual Broadcasting

My usual videos require an iPad on my desk in between my Mac and me† to film from both cameras at once, which sort of "detaches" me from experiencing the Mac and really getting into the groove of programming, since I have to do everything looking through the iPad's screen. They also require an hour or more of my undivided attention when it's quiet enough in my house to record, then another hour re-encoding the video, and then maybe another editing the automated closed-captioning files.

Since my live-streaming setup only captures my Mac's screen and no audio or video of me, it allows me to broadcast on a whim without having to worry about what I look like at the moment, what noise is being made elsewhere in my house (usually my child running around) or what music I'm playing on my laptop, and I can get up from my desk whenever I need to for short amounts of time.

I may add an optional small video and audio stream of myself separately on the page in the future, but for now I like that it's just an ephemeral stream of my Macintosh screen.